
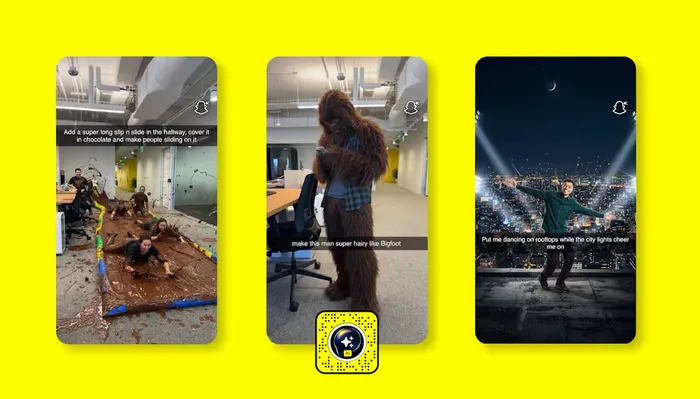

Image: Snap

In a demonstration of how social media platforms are racing to embed generative aesthetics into everyday communication, Snapchat has just opened one of its most advanced creative tools to the masses.

This week, the company is making its Imagine Lens, the app’s first open-prompt image-generation AI Lens, available to all US users at no cost.

Previously available only to paying subscribers (via its Lens+ and Platinum tiers), the Imagine Lens now lets any Snapchat user type in a prompt like “turn me into an alien” or “grumpy cat” and watch the AI transform a selfie or generate a new image altogether.

What’s notable is how the company is embedding prompt-driven image generation directly into the in-camera experience, rather than as a separate tool.

What’s technically interesting about this new feature is that the Imagine Lens is built to accept open text prompts rather than fixed filter choices.

According to Snapchat, this makes it more akin to the emerging generative-AI paradigm (text to image) than to the typical AR overlay model.

By making it free (at least in limited quantities) to everyone, Snap is reducing the friction for experimentation, which may surface new user behaviours, creative norms and in-app content formats.

In effect, Snap is shifting from curated filter chains toward a lightweight creative platform where text prompts and camera capture combine to yield shareable visuals.

For Snap, the move is about more than just fun filters.

According to the company, Snapchat Lenses are used more than 8 billion times daily.

Layering open-prompt image generation onto that scale means millions of users now have the power to generate bespoke visuals, not just pick from predefined filters.

Making the tool free now suggests that Snap is prioritising adoption and usage over generating immediate revenue.

The move comes as social platforms face growing pressure to stay relevant with younger, creative users who expect more than static photo sharing. By giving users access to AI-powered image creation, Snap is clearly trying to fend off competition from players offering advanced generative tools. Rather than users bringing external image-editing tools into the app, Snap is bringing generative capability into the camera interface. That matters when the interface is your primary social canvas.

There could be a global rollout, with Canada, the UK and Australia next in line to get the new Lens feature.

Here’s a quick look at what other major players are doing in the same space:

OpenAI – Sora

OpenAI’s text-to-video model “Sora” enables users to generate short videos from text prompts. OpenAI plans to integrate this into its chatbot ecosystem, extending generative social media from still images to moving images.

Meta Platforms – Meta AI Image Generator

Meta offers a generative-image tool within its chat ecosystem: users can type something like “Imagine me as a gladiator” and get personalised AI images. Meta is embedding it into Messenger, Instagram and Facebook, making generative visuals part of mainstream social chat.

Google DeepMind – Veo

Google’s Veo model (text-to-video) shows how generative AI is racing toward higher fidelity video creation, not just stills.

Beyond the major platforms, a host of creators and startups, such as Luma Labs, Runway and Veed, are building generative-video or image tools for social, marketing and creator workflows.