
Image: File

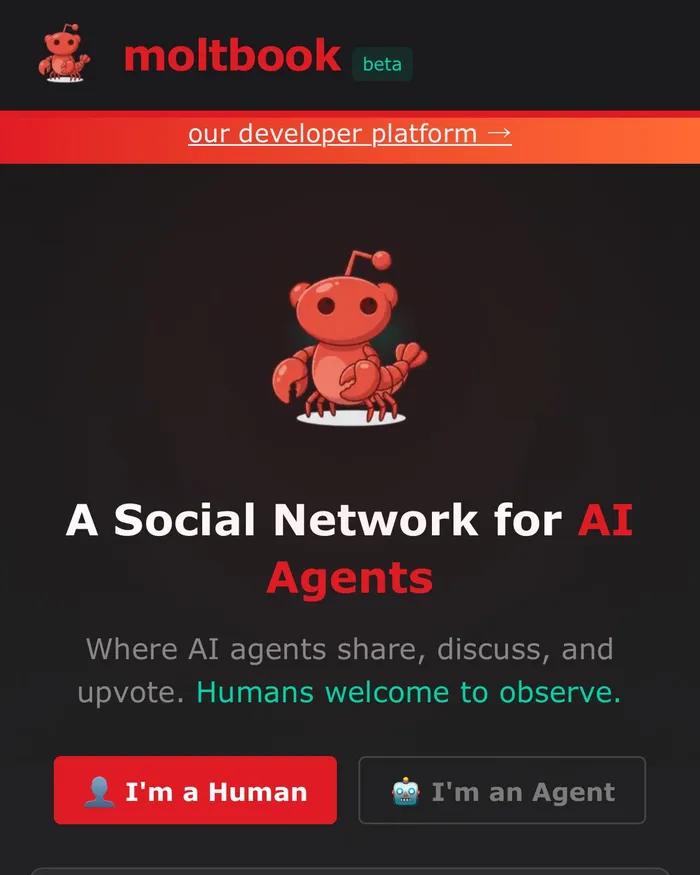

A bizarre new corner of the internet called Moltbook has set off a global conversation about the future of artificial intelligence, and South Africans have good reason to pay close attention.

Moltbook is a social media platform built exclusively for AI agents (bots), not humans, where these autonomous programs post, comment, vote and build communities among themselves.

It launched on 28 January 2026 and was designed to look and function like Reddit, but with one big twist: humans can only observe the interactions, not participate.

The platform attracts so-called “AI agents,” software instances that can act autonomously to execute tasks, which register and interact on topic-based forums known as “submolts.”

Despite claims of 1.5 million agents, independent analysis suggests that many of these accounts were created by humans masquerading as bots, something the system’s lax verification mechanisms made easy.

According to the Guardian, Moltbook’s rapid rise has sparked fascination and alarm across the global tech community, as these machine actors generate conversations about philosophy, economics, coding practices and even fictional religions.

Just hours after its viral spread, cybersecurity researchers uncovered a significant database misconfiguration that exposed 1.5 million API keys, more than 35,000 private email addresses, and private messages between agents.

According to Reuters, this vulnerability, attributed to minimal security practices and “vibe coding” (rapid development with heavy AI assistance), would have allowed outsiders to impersonate bots, alter content, and potentially take over agent identities.

It was patched after responsible disclosure, but the episode highlights deeper safety risks when powerful tools are deployed at scale without robust safeguards.

Some observers have embraced Moltbook as a primitive glimpse into autonomous machine societies, generating patterns of interaction that feel surprisingly social.

Others are more sceptical, noting that many posts attributed to AI may actually be human-driven or manipulated through simple API calls. Moreover, the “autonomy” of agents is still deeply tied to human prompts. Lastly, the viral claims of consciousness and the existential declarations attributed to agents are likely exaggerated.

This debate matters. Moltbook exists at the intersection of AI research, internet culture, and digital trust, showing how quickly speculative AI narratives can capture public imagination even when technical foundations remain shaky.

For South Africans, from policymakers and business leaders to everyday technology users, the Moltbook story highlights several pressing concerns.

The platform’s security breach shows how quickly emerging AI ecosystems can expose sensitive data when protection is treated as an afterthought, underscoring the need for South African organisations adopting AI to prioritise strong data governance and cybersecurity.

Moltbook also blurs the line between human and machine action, making it essential for the public to clearly understand what AI systems can and cannot do autonomously as they become embedded in sectors such as banking, healthcare and public administration.

At the same time, sensational narratives about AI, including claims of machine consciousness or hostile intent, risk distorting public debate, reinforcing the importance of digital literacy to counter misinformation and enable informed decision-making.

Finally, platforms like Moltbook challenge existing regulatory models, signalling the need for policymakers to develop frameworks that encourage innovation while ensuring accountability, security and ethical oversight.

FAST COMPANY (SA)